Physical Computing

When you see an interactive work, don't you wonder how information was detected, delivered, processed, and then output or fed back to the viewers?

Physical Computing uses electronics to prototype new materials for designers and artists.

Physical Computing is an approach to computer-human interaction design that starts by considering how humans express themselves physically. In physical computing, we take the human body and its capabilities as the starting point and attempt to design interfaces, both software and hardware, that can sense and respond to what humans can physically do.

Communication in Physical Computing:

sensing signals -> computing and sending commands -> executing.

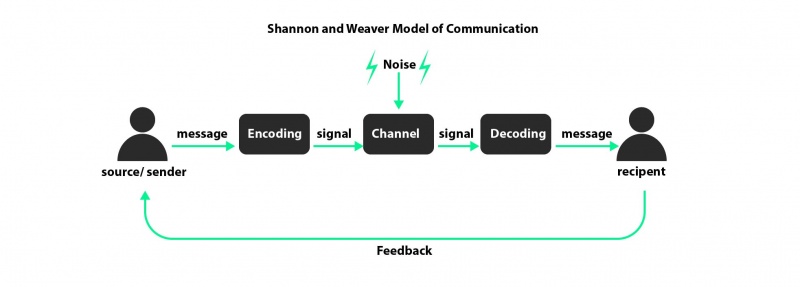

The Shannon and Weaver Model of Communication

It is known as the “mother of all models” because of its wide popularity, and it is closely tied to information theory. The model is named after its two authors: Claude Shannon, an American mathematician and electrical engineer, and Warren Weaver, an American scientist and mathematician. The Shannon-Weaver theory was first proposed in 1948, and the model is still applicable today.

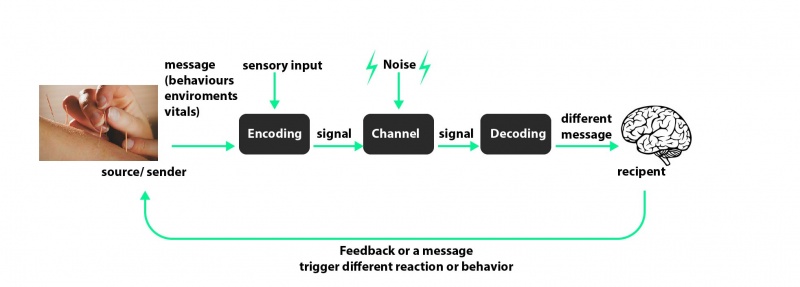

This kind of communication is very familiar to us:

For example, we use our ears to listen; they send signals to our brain, which decodes them and computes a response,

and then sends a command to our vocal cords to output that response.

If we duplicate what happens above in physical computing, it looks like this:

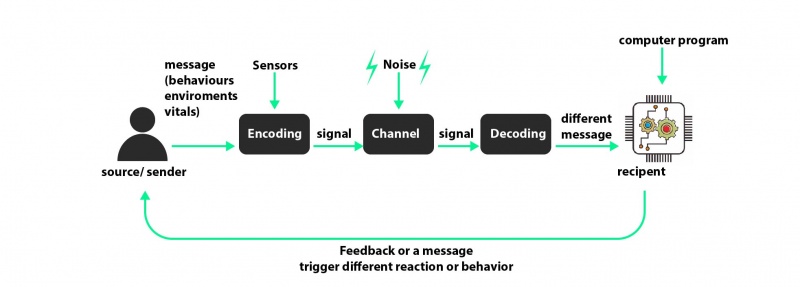

I use a mic to detect the overall sound volume; if it is too loud, a speaker starts beeping.

Let's break it down:

First, the mic converts the air vibrations into electrical pulses --> these are sent to a microcontroller -->

which we program to send electrical pulses to the speaker when the signal received from the mic reaches a certain limit

--> the speaker converts the electrical pulses into a beep.
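The decision step inside the microcontroller can be sketched in a few lines. This is a simulation in plain Python, not real firmware: the 0-1023 reading scale, the `THRESHOLD` value of 600, and the names `should_beep` and `run` are all assumptions made for illustration, and the list of readings stands in for samples a real analog input would supply.

```python
# Hypothetical limit. Readings are assumed to lie on a 0-1023 scale,
# as a 10-bit analog-to-digital converter would produce.
THRESHOLD = 600


def should_beep(mic_reading, threshold=THRESHOLD):
    """Return True when the mic signal reaches the loudness limit."""
    return mic_reading >= threshold


def run(readings):
    """Walk through sense -> compute -> execute for simulated mic readings."""
    outputs = []
    for reading in readings:                          # sense: one mic sample
        beep = should_beep(reading)                   # compute: compare to the limit
        outputs.append("beep" if beep else "silent")  # execute: drive the speaker
    return outputs


if __name__ == "__main__":
    print(run([120, 450, 610, 980, 300]))
```

On real hardware the loop would run continuously, and the right threshold would have to be found by experiment for the specific mic and room.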

Many sensors operate on the same principle:

For example, a light sensor such as an LDR (light-dependent resistor) lowers its electrical resistance as more light falls on the component's sensitive surface.
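A microcontroller reads voltage rather than resistance, so an LDR is commonly wired in series with a fixed resistor to form a voltage divider. The sketch below assumes that wiring (LDR on the supply side, fixed resistor to ground), a 5 V supply, and a 10 kΩ fixed resistor. The resistance values in the example are illustrative, not measurements from a specific part, and `divider_voltage` is a hypothetical helper name.

```python
def divider_voltage(r_ldr_ohms, r_fixed_ohms=10_000, v_supply=5.0):
    """Voltage at the junction of an LDR (to supply) and a fixed resistor (to ground).

    Assumed wiring: supply -> LDR -> junction -> fixed resistor -> ground.
    As light increases, the LDR resistance falls and the junction voltage rises.
    """
    return v_supply * r_fixed_ohms / (r_ldr_ohms + r_fixed_ohms)


if __name__ == "__main__":
    # Illustrative resistances only: high in the dark, low in bright light.
    for label, r_ldr in [("dark", 100_000), ("dim", 10_000), ("bright", 1_000)]:
        print(f"{label}: {divider_voltage(r_ldr):.2f} V")
```

With these assumed values, the voltage climbs as the light gets brighter, which is the change the microcontroller's analog input would pick up.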

A button can close the circuit to let electricity flow through, or open the circuit to cut it off.
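To a microcontroller, a button looks like a stream of 0s and 1s. The sketch below assumes the button is wired so that a closed circuit reads 1 and an open circuit reads 0, and it counts a press each time the reading changes from open to closed. The function name `count_presses` and the sample list are hypothetical, and real buttons also "bounce" briefly, which this sketch ignores.

```python
def count_presses(samples):
    """Count open-to-closed transitions in a sequence of 0/1 button readings.

    Assumes 1 means the circuit is closed (button pressed) and 0 means open.
    """
    presses = 0
    previous = 0  # assume the button starts released
    for current in samples:
        if current == 1 and previous == 0:
            presses += 1  # the circuit has just closed
        previous = current
    return presses


if __name__ == "__main__":
    # Simulated readings: held, released, tapped, released, pressed again.
    print(count_presses([0, 0, 1, 1, 1, 0, 1, 0, 0, 1]))
```

Counting transitions instead of raw 1s means that holding the button down registers as a single press.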

To sum up:

In order to create a physical interaction, we need to understand how a computer can sense physical action.

When we act, we cause changes in various forms of energy.

Speech generates the air pressure waves that are sound. Gestures change the flow of light and heat in a space.

Electronic sensors can convert these energy changes into changing electronic signals that can be read and interpreted by computers.

In physical computing, we learn how to connect sensors to the simplest of computers, called microcontrollers, in order to read these changes and interpret them as actions.

Finally, we learn how microcontrollers communicate with other computers in order to connect physical action with multimedia displays.