Difference between revisions of "Motion recognition for the Arduino"


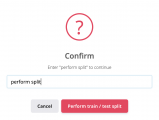

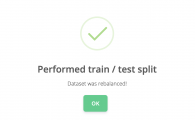


Gallery (train / test split): Step 1: Find the ⚠️ (Edge-pre-split1.png); Step 2: Click the ⚠️ (Edge-pre-split2.png)

Revision as of 21:51, 24 March 2022

Intro

Edge Impulse is a free online service where you can build machine learning models that recognize patterns in sensor data. Think of audio, images and, in the case of this tutorial, motion (accelerometer). Machine learning (ML) is a way of writing computer programs. Specifically, it's a way of writing programs that process raw data and turn it into information that is useful for a specific purpose. Unlike normal computer programs, the rules of ML programs are not determined by a human programmer. Instead, ML uses specialized algorithms (sequences of instructions) to learn from data in a process known as training.

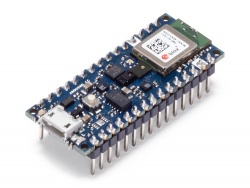

In this tutorial we will be using Edge Impulse to train an ML program to recognize gestures that you define yourself, using the accelerometer in your mobile phone. After successfully training the ML program on your gestures, we can generate code for the Arduino Nano 33 BLE Sense. This will allow you to perform your trained gestures with the Arduino while the ML program running on the Arduino tries to detect which gesture is being performed.

Getting Started

To train an ML program on your gestures you'll need:

- Computer with access to the internet (Firefox or Google Chrome)

- Mobile phone with an accelerometer sensor and an app to scan a QR code

- An account on Edge Impulse

To get your trained ML program on the Arduino you'll need:

- An Arduino Nano 33 BLE Sense

- Micro-USB to USB cable

Training an ML motion recognition program

Creating a project in Edge Impulse

After creating an account and logging in on Edge Impulse, click on your user profile in the top right corner, followed by 'Create new project'. This will open a new window where you can give your project a name. After naming your project, click on the green [Create new Project] button to create it. Edge Impulse will ask you what kind of sensor data you would like to collect. Since we're going to use the accelerometer in our mobile phone, click on 'Accelerometer data', followed by the green [Let's get started!] button.

Connecting your mobile phone

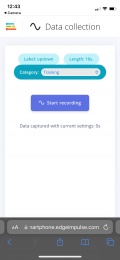

We will be using our mobile phones to collect accelerometer (gesture) data. That means we need a way to record data and send it over to Edge Impulse. To connect your phone to Edge Impulse, click on the 'Devices' tab. On the new page, click on the 'Connect new device' button in the top right corner, followed by 'Show QR code'. Now grab your mobile phone and use your camera or a QR code scanner app (e.g. Google Lens) to scan the QR code shown on the screen. When this succeeds you should see a green ✅ check mark ✅. After seeing the check mark, click on [Collecting motion?] to collect accelerometer data. Now we have to label the gesture we are going to collect. I'll start with a basic up and down gesture, so my label will be 'updown'. For the length it's recommended to pick 10 seconds, because we will record the up and down gesture in a continuous motion (you don't want to do this for minutes, trust me). As for the category, we will pick 'Training'. When all these options are set, we're ready to collect data!

Collecting data

To start collecting accelerometer data (the up down gesture), click on the blue [Start recording] button. Immediately after clicking the button, start performing your gesture and keep going for 10 seconds (or the amount of time you've set in the options). After the 10 seconds are up you should see the recorded gesture in the 'Data acquisition' tab within Edge Impulse. If this is not the case, try to connect your mobile phone again using the steps above.

Now that your data is successfully being picked up by Edge Impulse, it is time to record more variations of the up down gesture. It is recommended to record about 5 minutes of accelerometer data for a single gesture. It is best to ask different people to record this gesture for you, as everyone performs it slightly differently. The more variation the ML program sees during training, the better it will perform afterwards.

When you've collected about 5 minutes of data for a specific gesture, try to think of other gestures and record these as well (don't forget to label them accordingly!). Edge Impulse allows you to record 4 hours of data, so you can add many gestures to your ML program.
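To get a feel for how much recording this involves, the numbers from this tutorial (10-second recordings, about 5 minutes per gesture, 4 hours in total) can be worked out in a few lines of Python. This is only a back-of-the-envelope sketch; the function names are made up for illustration and are not part of Edge Impulse.

```python
import math

# Figures taken from this tutorial.
RECORDING_SECONDS = 10          # length of one recording
TARGET_MINUTES_PER_GESTURE = 5  # recommended amount of data per gesture
TOTAL_HOURS = 4                 # total amount of data mentioned above


def recordings_per_gesture(target_minutes=TARGET_MINUTES_PER_GESTURE,
                           recording_seconds=RECORDING_SECONDS):
    """Number of recordings needed to reach the target for one gesture."""
    return math.ceil(target_minutes * 60 / recording_seconds)


def max_gestures(total_hours=TOTAL_HOURS,
                 target_minutes=TARGET_MINUTES_PER_GESTURE):
    """Number of gestures that fit in the total if each gets the full target."""
    return (total_hours * 60) // target_minutes


print(recordings_per_gesture())  # 30 recordings of 10 seconds each
print(max_gestures())            # 48 gestures at 5 minutes each
```

So one gesture takes about 30 short recordings, which is a good reason to spread the work over several people.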

Training & test data

So far we've collected about 5 minutes of training data for each gesture we want the ML program to learn. To train a well-performing ML program, we also need some test data. Test data is data that the ML program never sees during training. We will use it to verify that the ML program can also detect gestures it hasn't seen in the training process. To automatically divide all your data into train and test, click the ⚠️ exclamation mark icon ⚠️. A pop-up window will open and suggest that you divide your data in an 80/20 ratio. Click on the [Perform train / test split] button, then the [Yes, perform train / test split] button, and finally type "perform split", followed by pressing the [perform train / test split] button.
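Edge Impulse does the split for you, but it can help to see what an 80/20 split means. The sketch below is not how Edge Impulse implements it; it is a minimal Python illustration that assumes each recording is a (label, name) pair and splits every gesture separately, so that both sets contain all gestures. The 'circle' label and the recording names are hypothetical examples.

```python
import random
from collections import defaultdict


def split_train_test(samples, test_fraction=0.2, seed=42):
    """Split (label, name) pairs into train and test lists, per label."""
    rng = random.Random(seed)  # fixed seed so the split is repeatable

    # Group the recordings by gesture label.
    by_label = defaultdict(list)
    for label, name in samples:
        by_label[label].append(name)

    train, test = [], []
    for label, names in by_label.items():
        names = names[:]   # copy, so the caller's data is not modified
        rng.shuffle(names)
        n_test = round(len(names) * test_fraction)
        test += [(label, n) for n in names[:n_test]]
        train += [(label, n) for n in names[n_test:]]
    return train, test


# Hypothetical data set: 30 recordings for each of two gestures.
samples = [("updown", f"updown.{i}") for i in range(30)]
samples += [("circle", f"circle.{i}") for i in range(30)]

train, test = split_train_test(samples)
print(len(train), len(test))  # 48 12
```

Because the test recordings are set aside before training, the score on them tells you how well the ML program handles gestures it has not seen before.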