SaF

Jump to navigation

Jump to search

(Created page with "1. Installing Pip (package management system recommended for installing Python packages) -> [https://pip.pypa.io/en/latest/installing.html Click here] 2. Installing all the l...") |

|||

| (7 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| + | <div style='width:70%'> | ||

1. Installing Pip (package management system recommended for installing Python packages) -> [https://pip.pypa.io/en/latest/installing.html Click here] | 1. Installing Pip (package management system recommended for installing Python packages) -> [https://pip.pypa.io/en/latest/installing.html Click here] | ||

| − | 2. Installing all the libraries you need | + | 2. Installing all the libraries you need . After having installed pip,enter "sudo pip install nameOfpackage" in your command line. To verify if a package is previously installed, type enter "python" on your command line. Then enter "import nameOfpackage". If no error messages are displayed, congratulations, the package is installed! |

Some useful links for the Python libraries used during the workshop: | Some useful links for the Python libraries used during the workshop: | ||

| Line 9: | Line 10: | ||

*https://docs.python.org/2/library/urllib2.html | *https://docs.python.org/2/library/urllib2.html | ||

*http://pymotw.com/2/urlparse/ | *http://pymotw.com/2/urlparse/ | ||

| + | |||

| + | |||

| + | |||

| + | '''PRACTICAL EXAMPLE''' | ||

| + | |||

| + | |||

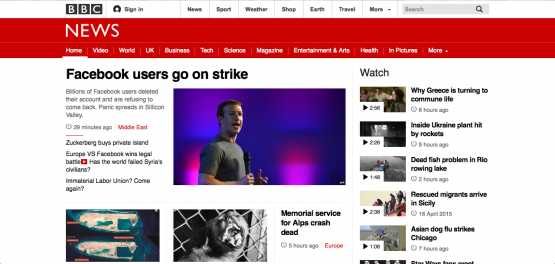

| + | [[File:scrapeandfake.png | 555px]] | ||

| + | |||

| + | |||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | #all the libraries we need! | ||

| + | #for installing htmllib, use sudo -H pip install html5lib==1.0b8 | ||

| + | import html5lib, urlparse | ||

| + | from urlparse import urljoin, urldefrag | ||

| + | import urllib2 | ||

| + | from urllib2 import urlopen | ||

| + | from xml.etree import ElementTree as ET | ||

| + | import time | ||

| + | |||

| + | #here you choose which website you want to tinker with | ||

| + | url = 'http://www.bbc.com/news/' | ||

| + | #the html file is opened here, you can name it whatever you want | ||

| + | culturalJam = open('replacebbc.html', 'w') | ||

| + | txdata = None | ||

| + | # faking a user agent to access information from websites that prevent automated browsing | ||

| + | txheaders = {'User-agent' : 'Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)'} | ||

| + | req = urllib2.Request(url,txdata, txheaders) | ||

| + | |||

| + | |||

| + | f = urlopen(req) | ||

| + | #it returns an xml.etree element instance - that is, the hierarchical xml \ | ||

| + | structure (in this case html) of the document we are going to analyse | ||

| + | parsed = html5lib.parse(f, namespaceHTMLElements=False) | ||

| + | #let us start looking for the elements we want to change in the future! | ||

| + | #lists all the sources, independently of their tag | ||

| + | sources = parsed.findall(".//*[@src]") | ||

| + | #lists all the hrefs, independently of their tag | ||

| + | hrefs = parsed.findall(".//*[@href]") | ||

| + | #lists all the spans's inside the first h3 it finds | ||

| + | title = parsed.find(".//h3/span") | ||

| + | #lists the first paragraph within the element whose id is 'albatross__body' | ||

| + | parag = parsed.find(".//*[@class='buzzard__body']/p") | ||

| + | #lists all the anchors within list tags within the element whose class \ | ||

| + | is 'links-list__list' | ||

| + | related = parsed.findall (".//*[@class='links-list__list']/li/a") | ||

| + | #lists the first image within the element whose id is 'albatross__image' | ||

| + | image = parsed.find(".//*[@class='buzzard__image']/div/img") | ||

| + | #changes happen here \o/ | ||

| + | title.text = 'Facebook users go on strike' | ||

| + | parag.text = 'Billions of Facebook users deleted their account and are \ | ||

| + | refusing to come back. Panic spreads in Sillicon Valley.' | ||

| + | related[0].text = "Zuckerberg buys private island" | ||

| + | related[1].text = "Europe VS Facebook wins legal battle" | ||

| + | related[2].text = "Immaterial Labor Union? Come again?" | ||

| + | related[3].text = "Obama talks Twitter and Instagram" | ||

| + | image.attrib['src'] = "http://news.bbcimg.co.uk/media/images/78103000/jpg/_78103509_mark.jpg" | ||

| + | #both these blocks make all the links within the page absolute, so we won't \ | ||

| + | lose any information | ||

| + | for src in sources: | ||

| + | if not src.attrib.get('src').startswith('http'): | ||

| + | lala = src.attrib.get('src') | ||

| + | absolutize = urlparse.urljoin(url, lala) | ||

| + | src.attrib['src'] = absolutize | ||

| + | |||

| + | for href in hrefs: | ||

| + | if not href.attrib.get('href').startswith('http'): | ||

| + | lolo = href.attrib.get('href') | ||

| + | absoluting = urlparse.urljoin(url, lolo) | ||

| + | href.attrib['href'] = absoluting | ||

| + | #write everything, including the changes to the element tree, to the \ | ||

| + | html file we opened earlier! | ||

| + | culturalJam.write(ET.tostring(parsed, method='html')) | ||

| + | culturalJam.close() | ||

| + | |||

| + | </syntaxhighlight> | ||

| + | |||

| + | </div> | ||

| + | |||

| + | [[Category:Hacking]] | ||

Latest revision as of 15:36, 21 November 2022

1. Installing pip (the package management system recommended for installing Python packages): https://pip.pypa.io/en/latest/installing.html

2. Installing all the libraries you need. Once pip is installed, enter "sudo pip install nameOfpackage" on your command line. To check whether a package is already installed, enter "python" on your command line to start the interpreter, then enter "import nameOfpackage". If no error message is displayed, congratulations, the package is installed!
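The import check in step 2 can also be scripted. The sketch below is a minimal illustration, not part of the workshop material. `check_installed` is a hypothetical helper that tries to import each module name with the standard `importlib` module and reports which ones fail. It runs on both Python 2.7 and Python 3.

```python
import importlib


def check_installed(names):
    """Hypothetical helper: map each module name to True if it can be imported."""
    status = {}
    for name in names:
        try:
            importlib.import_module(name)
            status[name] = True
        except ImportError:
            # Same outcome as typing "import name" in the interpreter and getting an error.
            status[name] = False
    return status


if __name__ == "__main__":
    # html5lib is installed with pip; urllib2 and urlparse ship with Python 2
    # (Python 3 reorganized them into urllib.request/urllib.error and urllib.parse).
    for name, ok in sorted(check_installed(["html5lib", "urllib2", "urlparse"]).items()):
        print("%s: %s" % (name, "installed" if ok else "missing"))
```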

Some useful links for the Python libraries used during the workshop:

- http://edward.oconnor.cx/2009/08/djangosd-html5lib#title

- https://pypi.python.org/pypi/html5lib

- http://www.pythonforbeginners.com/python-on-the-web/how-to-use-urllib2-in-python/

- https://docs.python.org/2/library/urllib2.html

- http://pymotw.com/2/urlparse/
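The urllib2 documentation linked above describes `Request` objects. The practical example uses one to send a browser-like `User-agent` header. Below is a minimal sketch that builds such a request without sending it, so you can inspect it first. It assumes Python 3's `urllib.request` and falls back to `urllib2` on Python 2. The header value is the one used in the practical example.

```python
try:
    from urllib.request import Request  # Python 3
except ImportError:
    from urllib2 import Request  # Python 2, as in the practical example

url = "http://www.bbc.com/news/"
headers = {"User-agent": "Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)"}

# data=None keeps this a GET request; nothing goes over the network until urlopen(req).
req = Request(url, None, headers)

print(req.get_method())              # GET
print(req.get_header("User-agent"))  # Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)
```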

PRACTICAL EXAMPLE

```python
# All the libraries we need!
# To install html5lib, use: sudo -H pip install html5lib==1.0b8
# This script is written for Python 2 (urllib2 and urlparse do not exist in Python 3)
import urllib2
from urlparse import urljoin
from xml.etree import ElementTree as ET

import html5lib

# Here you choose which website you want to tinker with
url = 'http://www.bbc.com/news/'

# Fake a browser user agent to access websites that block automated browsing
txheaders = {'User-agent': 'Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)'}
req = urllib2.Request(url, None, txheaders)
f = urllib2.urlopen(req)

# html5lib.parse returns an xml.etree element instance, that is, the hierarchical
# XML structure (in this case HTML) of the document we are going to analyse
parsed = html5lib.parse(f, namespaceHTMLElements=False)

# Let us start looking for the elements we want to change!
# All elements that have a src attribute, whatever their tag
sources = parsed.findall(".//*[@src]")
# All elements that have an href attribute, whatever their tag
hrefs = parsed.findall(".//*[@href]")
# The first span that is a direct child of an h3
title = parsed.find(".//h3/span")
# The first paragraph directly inside the element whose class is 'buzzard__body'
parag = parsed.find(".//*[@class='buzzard__body']/p")
# All the anchors inside list items of the element whose class is 'links-list__list'
related = parsed.findall(".//*[@class='links-list__list']/li/a")
# The first img inside a div of the element whose class is 'buzzard__image'
image = parsed.find(".//*[@class='buzzard__image']/div/img")

# Changes happen here \o/
# find() returns None if the page layout no longer matches, so check first
if title is not None:
    title.text = 'Facebook users go on strike'
if parag is not None:
    parag.text = ('Billions of Facebook users deleted their account and are '
                  'refusing to come back. Panic spreads in Silicon Valley.')
fake_headlines = ["Zuckerberg buys private island",
                  "Europe VS Facebook wins legal battle",
                  "Immaterial Labor Union? Come again?",
                  "Obama talks Twitter and Instagram"]
# zip stops at the shorter list, so fewer than four related links is not an error
for link, headline in zip(related, fake_headlines):
    link.text = headline
if image is not None:
    image.attrib['src'] = "http://news.bbcimg.co.uk/media/images/78103000/jpg/_78103509_mark.jpg"

# Both these loops make all the links within the page absolute,
# so we won't lose any information (images, stylesheets, links)
for src in sources:
    if not src.attrib.get('src').startswith('http'):
        src.attrib['src'] = urljoin(url, src.attrib.get('src'))

for href in hrefs:
    if not href.attrib.get('href').startswith('http'):
        href.attrib['href'] = urljoin(url, href.attrib.get('href'))

# Write everything, including the changes to the element tree, to an HTML file
# (you can name it whatever you want)
with open('replacebbc.html', 'w') as culturalJam:
    culturalJam.write(ET.tostring(parsed, method='html'))
```
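The BBC markup targeted by the script above is likely to have changed since this page was written, so its selectors may no longer match anything. The sketch below exercises the same ElementTree paths and the `urljoin`-based link rewriting on a tiny hand-written document, using only the standard library (no network, no html5lib). The sample markup and the `absolutize` helper are hypothetical. It assumes `urljoin` from Python 3's `urllib.parse` and falls back to `urlparse` on Python 2. Unlike the script, which skips values that already start with `http`, the helper passes every value to `urljoin`. `urljoin` returns URLs that are already absolute unchanged.

```python
import xml.etree.ElementTree as ET

try:
    from urllib.parse import urljoin  # Python 3
except ImportError:
    from urlparse import urljoin  # Python 2, as in the practical example

BASE_URL = "http://www.bbc.com/news/"

# Hypothetical, hand-written stand-in for the downloaded page.
SAMPLE = """
<html><body>
  <h3><span>Original headline</span></h3>
  <div class="buzzard__body"><p>Original first paragraph.</p><p>Second.</p></div>
  <div class="buzzard__image"><div><img src="/media/pic.jpg"/></div></div>
  <a href="world-asia">Asia</a>
  <a href="http://example.com/">Elsewhere</a>
</body></html>
"""


def absolutize(root, attr, base):
    """Hypothetical helper: rewrite every `attr` attribute as an absolute URL."""
    for el in root.findall(".//*[@%s]" % attr):
        # urljoin leaves URLs that are already absolute unchanged.
        el.set(attr, urljoin(base, el.get(attr)))


root = ET.fromstring(SAMPLE.strip())

# Same selectors as the script; find() returns None when nothing matches.
title = root.find(".//h3/span")
parag = root.find(".//*[@class='buzzard__body']/p")
image = root.find(".//*[@class='buzzard__image']/div/img")

if title is not None:
    title.text = "Facebook users go on strike"
if parag is not None:
    parag.text = "Billions of Facebook users deleted their account."

absolutize(root, "src", BASE_URL)
absolutize(root, "href", BASE_URL)

html = ET.tostring(root, method="html")
if isinstance(html, bytes):  # Python 3 returns bytes here
    html = html.decode("ascii")
print(html)
```

After running it, the image source becomes `http://www.bbc.com/media/pic.jpg` and the relative link becomes `http://www.bbc.com/news/world-asia`. This is the same resolution the script applies to the real page.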