Motion recognition for the Arduino


Revision as of 11:11, 24 March 2022

Intro

Edge Impulse is a free online service for building machine learning models that recognize patterns in sensor data, such as audio, images or, in this tutorial, motion (accelerometer data). Machine learning (ML) is a way of writing computer programs. Specifically, it is a way of writing programs that process raw data and turn it into information that is useful for a specific purpose. Unlike conventional programs, the rules of an ML program are not written by a human programmer. Instead, ML uses specialized algorithms (sequences of instructions) to learn those rules from example data, in a process known as training.
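To make "learning rules from data" concrete, here is a minimal sketch of training on accelerometer data. It is a toy nearest-centroid classifier, not the pipeline Edge Impulse uses: each gesture window of (x, y, z) readings is reduced to a per-axis mean and standard deviation, training averages those features per label, and classification picks the closest average. The function names and the example data are hypothetical and chosen only for illustration.

```python
import math

# Hypothetical toy example: a "window" is a list of (x, y, z) accelerometer
# readings (m/s^2), and training data is a list of (window, label) pairs.

def features(window):
    """Reduce a window of (x, y, z) readings to per-axis mean and standard deviation."""
    n = len(window)
    feats = []
    for axis in range(3):
        values = [reading[axis] for reading in window]
        mean = sum(values) / n
        var = sum((v - mean) ** 2 for v in values) / n
        feats.extend([mean, math.sqrt(var)])
    return feats

def train(samples):
    """'Training': average the feature vectors of each label into one centroid."""
    sums, counts = {}, {}
    for window, label in samples:
        f = features(window)
        if label not in sums:
            sums[label] = [0.0] * len(f)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], f)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(model, window):
    """Return the label whose centroid is closest (Euclidean distance) to the window's features."""
    f = features(window)
    return min(model, key=lambda label: math.dist(f, model[label]))

# Usage: the rule separating "idle" from "shake" is learned from examples, not hand-written.
idle = [(0.0, 0.0, 9.8)] * 10
shake = [(5.0 if i % 2 else -5.0, 0.0, 9.8) for i in range(10)]
model = train([(idle, "idle"), (shake, "shake")])
print(classify(model, [(0.1, 0.0, 9.7)] * 10))  # idle
```

Nobody wrote a threshold for "shaking" here; the distinction comes entirely from the labeled examples, which is the core idea behind training. Real gesture models use richer features and models, but the train-then-classify structure is the same.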

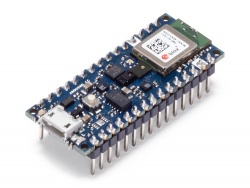

In this tutorial you will use Edge Impulse to train an ML program to recognize gestures that you define with the accelerometer in your mobile phone. Once the ML program has been trained on your gestures, Edge Impulse can generate code for the Arduino Nano 33 BLE Sense. You can then perform the trained gestures while holding the Arduino, and the ML program running on it will try to detect which gesture you are performing.
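Detecting gestures while you move the board means classifying a continuous stream of accelerometer readings. One common approach, assumed here rather than taken from the code Edge Impulse generates, is to cut the stream into fixed-length, possibly overlapping windows and classify each one. In this sketch, `sliding_windows`, `detect` and `toy_classifier` are hypothetical names, and `toy_classifier` is a hand-written stand-in for a trained model.

```python
def sliding_windows(stream, window_size, step):
    """Yield (start_index, window) pairs of fixed-length windows from a list of readings."""
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    # Only complete windows are produced; a trailing partial window is skipped.
    for start in range(0, len(stream) - window_size + 1, step):
        yield start, stream[start:start + window_size]

def detect(stream, classify, window_size, step):
    """Run a classifier on every window and return (start_index, label) pairs."""
    return [(start, classify(window))
            for start, window in sliding_windows(stream, window_size, step)]

def toy_classifier(window):
    """Hypothetical stand-in for a trained model: 'shake' if x varies by more than 4 m/s^2."""
    xs = [reading[0] for reading in window]
    return "shake" if max(xs) - min(xs) > 4.0 else "idle"

# Usage: 8 resting readings followed by 8 readings of shaking along the x axis.
stream = [(0.0, 0.0, 9.8)] * 8 + [(5.0 if i % 2 else -5.0, 0.0, 9.8) for i in range(8)]
print(detect(stream, toy_classifier, window_size=4, step=4))
# [(0, 'idle'), (4, 'idle'), (8, 'shake'), (12, 'shake')]
```

A step smaller than the window size makes windows overlap, so a gesture that straddles a window boundary is still seen whole by some window, at the cost of running the classifier more often.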

Getting Started

To train an ML program on your gestures, you'll need:

- A computer with internet access and a web browser (Firefox or Google Chrome)

- A mobile phone with an accelerometer and an app that can scan QR codes

- An account on [Edge Impulse](https://studio.edgeimpulse.com/signup)